Optimization

ACO for multi-objective optimization problems

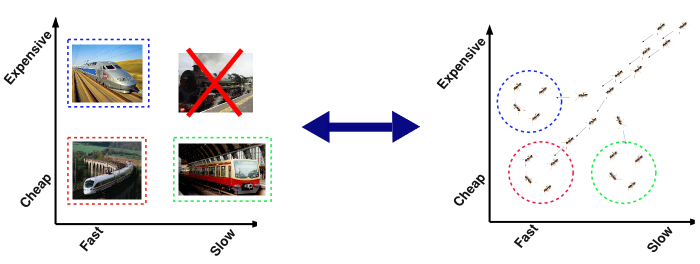

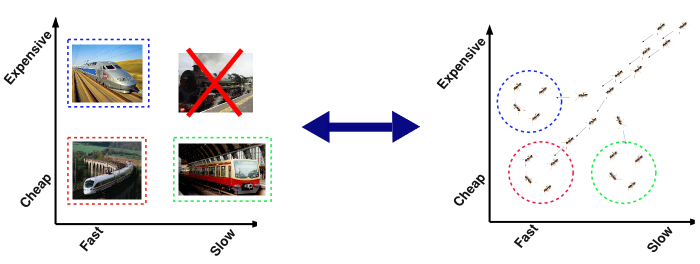

Multiobjective optimization problems are problems with several, typically conflicting, criteria for evaluating solutions. Without any a priori preference information, the Pareto optimality principle establishes a partial order among solutions, and the output of the algorithm becomes a set of nondominated solutions rather than a single one. Various ant colony optimization (ACO) algorithms have been proposed in recent years for solving such problems. These multiobjective ACO (MOACO) algorithms exhibit different design choices for dealing with the particularities of the multiobjective context. In our research, we have experimentally analyzed the available MOACO algorithms and proposed a framework for MOACO algorithms that implements algorithmic components from many of the available MOACO algorithms. This framework can be used to describe most MOACO algorithms proposed so far. It also shows that existing MOACO algorithms often share equivalent design choices, but they are described in different terms. In addition, this algorithmic framework allows to instantiate new MOACO algorithms that were never studied in the literature. The flexibility of the proposed MOACO framework also facilitates the application of automatic algorithm configuration techniques and our experimental results show that the automatically configured MOACO framework outperforms existing MOACO algorithms for several problems such as the bi-objective traveling salesperson problem or bi-objective knapsack. Currently, extensions of the framework to more than two objectives are explored.

- An Experimental Analysis of Design Choices of Multi-objective Ant Colony Optimization Algorithms.

Lopez-Ibanez M., Stützle T. - Swarm Intelligence, Volume 6, Number 3, Pages 207-232, 2012.

- The Automatic Design of Multi-Objective Ant Colony Optimization Algorithms.

Lopez-Ibanez M., Stützle T. - IEEE Transactions on Evolutionary Computation, Volume 16, Number 6, Pages 861-875, 2012

- Automatic Generation of Multi-objective ACO Algorithms for the Bi-objective Knapsack

Bezerra L.C.T., Lopez-Ibanez M., Stützle T. - In Swarm Intelligence (ANTS 2012), M. Dorigo and others (editors), Springer-Verlag, Pages 37-48, Volume 7641, Lecture Notes in Computer Science, 2012

ACO for dynamic optimization problems

Dynamic optimization problems are problems where the objective function, the constraints, or the problem data may change while implementing a solution. In previous research, several proposals of how to adapt the ACO algorithms to dynamic problems have been made, in part with considerable success. In our ongoing research, we examined the convergence speed of various ACO algorithms towards high-quality solutions as fast converging algorithms may be crucial to adapt quickly to modified situations. In a second step, we are comparing several of the proposed strategies to adapt ACO algorithms to dynamic problems using as a common testbed the dynamic traveling salesperson problem and dynamic vehicle routing problems. A second stream of work considers a very different setting, the dependence of algorithm parameters on the progress of the search. In particular, the search status of an algorithm can be seen as changing and algorithm parameter settings may need to be dynamically adapted to provide best search progress. Our research here focuses on the examination of adaptation strategies for ACO algorithm parameters.

- A Detailed Analysis of the Population-based Ant Colony Optimization Algorithm for the TSP and the QAP

Oliveira S., Bin Hussin M.S., Stützle T., Dorigo M.

In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO-2011), ACM Press, Pages 13-14, 2011

- A Critical Analysis of Parameter Adaptation in Ant Colony Optimization.

Pellegrini P., Stützle T., Birattari M. - Swarm Intelligence, Volume 6, Number 1, Pages 23-48, 2012

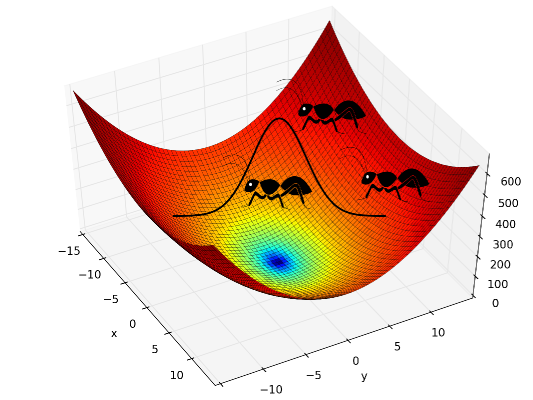

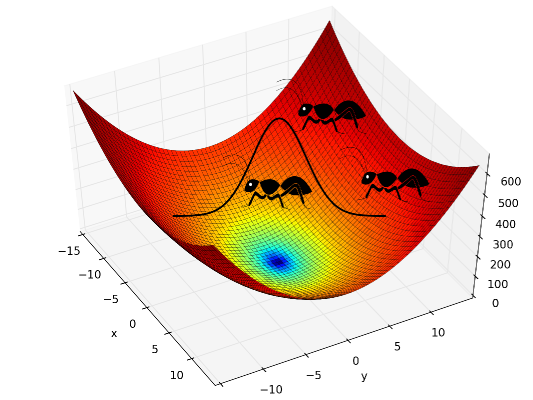

ACO for continuous optimization problems

Socha and Dorigo have proposed an ACO algorithm for continuous domains, called ACOR. The central idea underlying ACOR is to replace the discrete probability distribution used in the solution construction of ACO algorithms for combinatorial problems with probability density functions (PDFs) for constructing solutions in the continuous domain. In recent research, we have proposed a number of extensions of ACOR. A first extension is the IACOR-LS algorithm, which uses a different population management than ACOR, features a growing solution archive, and it considers the inclusion in ACOR of local search algorithms for improving the solutions constructed by the artificial ants. A second extension is the generation of the UACOR framework; this framework comprises algorithmic components from other ACO algorithms for continuous domains and can thus be seen as an algorithmic framework for continuous ACO algorithms. The design of UACOR allows the usage of automatic algorithm configuration techniques to automatically derive new, high-performing ACO algorithms for continuous optimization. A comprehensive experimental evaluation of automatically configured algorithms from the UACOR framework has shown that these are not only a significant improvement over the original ACOR, but that they are also competitive with the state-of-the-art algorithms for continuous optimization.

- Continuous Optimization Algorithms for Tuning Real and Integer Parameters of Swarm Intelligence Algorithms.

Yuan Z., Montes de Oca M., Birattari M., Stützle T. - Swarm Intelligence, Volume 6, Number 1, Pages 49-75, 2012

- Ant Colony Optimization

Dorigo M., Montes de Oca M., Oliveira S., Stützle T. In Wiley Encyclopedia of Operations Research and Management Science, J. J. Cochran (editors), John Wiley and Sons, Volume 1, Pages 114-125, 2011

- An Incremental Ant Colony Algorithm with Local Search for Continuous Optimization

Liao T., Montes de Oca M., Aydin D., Stützle T., Dorigo M. - In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO'11), N. Krasnogor et al. (editors), ACM Press, Pages 125-132, 2011, Best Paper Award

- An ACO Algorithm Benchmarked on the BBOB Noiseless Function Testbed

Liao, T., Molina, D., Stützle T., Montes de Oca, M.A., Dorigo, M. - GECCO (Companion) 2012: 159-166